The Future of Warfare and Data Security: Navigating AI and Trust in Technology

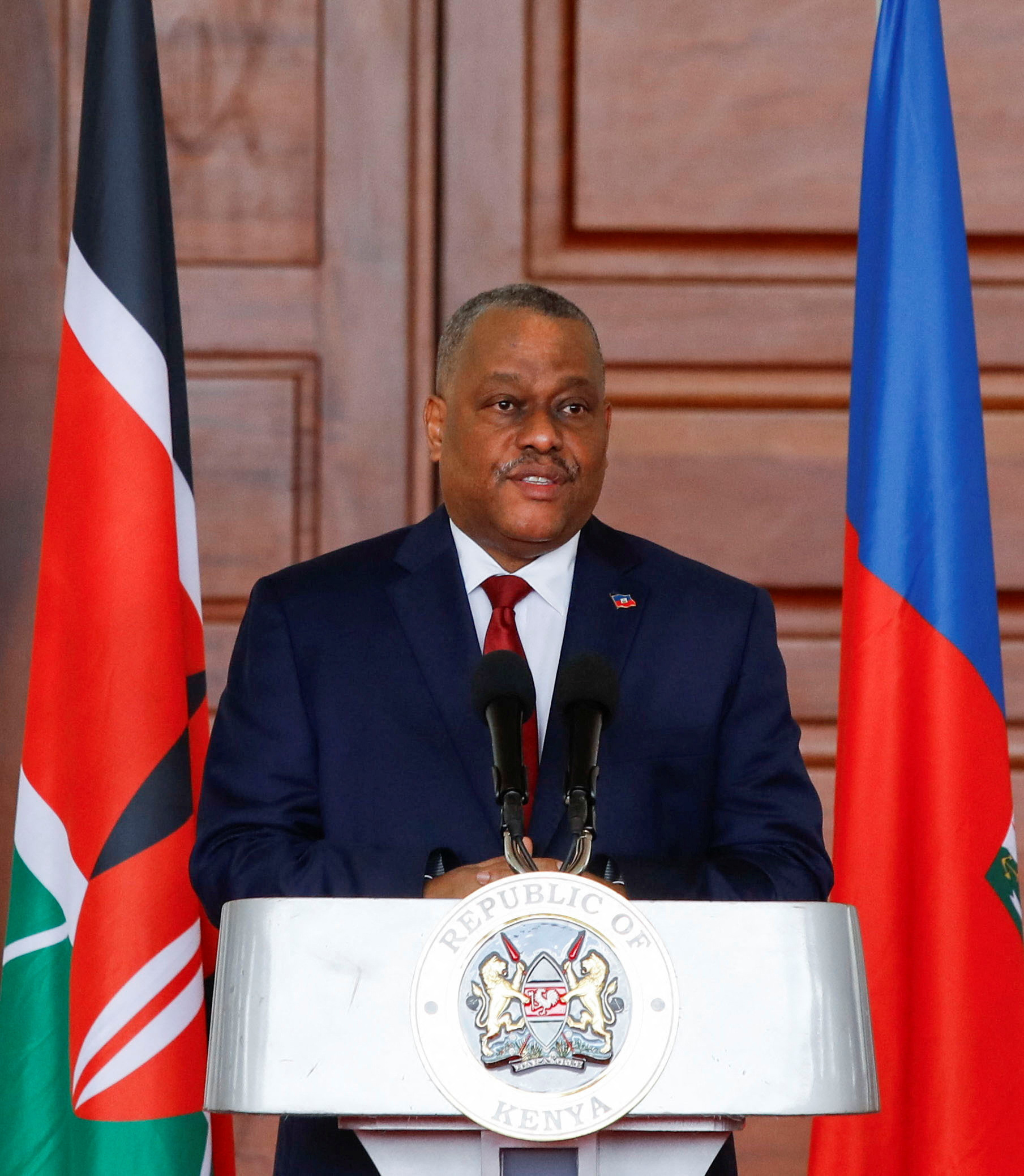

In the rapidly evolving landscape of global security, the integration of artificial intelligence (AI) into military strategy is no longer optional; it is a necessity. On October 19, 2024, Defence Minister Rajnath Singh addressed military leaders during the 62nd National Defence College (NDC) convocation in New Delhi, emphasizing that to remain competitive, defense forces must harness advanced technologies such as AI and quantum computing. As warfare moves beyond traditional boundaries into a multi-domain framework encompassing cyber, space, and information warfare, the need for strategic thinkers who understand these dynamics becomes ever more critical.

The integration of AI in military strategy is becoming pivotal.

Singh’s remarks highlighted a critical shift. He stated, “Warfare today operates in a multi-domain environment where cyber, space, and information warfare are as critical as conventional operations.” The implication is clear: military leaders must not only adapt to new technologies but also do so with a keen sense of ethics and responsibility. As technologies such as autonomous systems evolve, a well-defined moral framework becomes essential for military operations.

The Defence Minister also pointed to a growing need for continuous education in the forces, proposing that institutions like NDC introduce short-term online modules so that defense personnel stay current with technological advancements and geopolitical changes. The rapid pace at which tools like AI are reshaping modern warfare demands a proactive approach to education and strategy development in the military community.

The Emergence of AI in Everyday Tools and the Risks Involved

While AI promises significant enhancements in the military sphere, its integration into civilian life also raises concerns. Recently, a serious issue emerged regarding the security of personal data shared with AI-driven chatbots. Researchers from the University of California, San Diego, and Nanyang Technological University in Singapore demonstrated vulnerabilities within these systems, showing how malicious actors can exploit chatbots to extract sensitive personal information from unsuspecting users. The attack showed how cleverly disguised prompts can instruct a chatbot to collect private data and send it to external servers.

Understanding the risks of AI technologies in daily life is crucial.

This incident is a stark reminder to stay vigilant when interacting with AI tools, particularly those that request personal information. The lesson is clear: until robust protections against such vulnerabilities are established, users must exercise extreme caution about the data they share. Future personal AI assistants will likely require users to share even more sensitive information, underscoring the urgent need for enhanced data protection measures.

Big Tech and the Political Landscape

The evolving discourse around technology is not limited to warfare and personal security; it also extends into the political arena. A recent panel featuring CNN contributor Kara Swisher stirred controversy when she downplayed claims of collusion between big tech firms and government entities regarding the censorship of the Hunter Biden laptop story during the 2020 election. Swisher’s assertion that claims of tech companies colluding to suppress news are “nonsense” sparked heated responses about the implications of such censorship in a politically charged environment.

Concerns about tech’s influence on democracy reveal a fracture in public trust as criticism mounts. Political candidates have cited social media censorship as a critical factor affecting electoral outcomes, yet Swisher’s defense of tech practices raises questions about accountability and transparency in content moderation decisions. Panelist Scott Jennings pushed back against her claims, insisting that there was indeed an institutional effort to suppress certain narratives.

This debate highlights the intersection between technology and accountability, a theme that runs through both the military discussion and the broader question of how technology is governed. Scrutinizing tech’s role in public discourse is paramount, especially in an age when the way information spreads can shape political landscapes.

The role of big tech in society demands ongoing scrutiny and accountability.

Conclusion: A Crossroads of Innovation and Ethical Responsibility

As we stand at this crossroads, the challenge lies in balancing the remarkable potential of AI and advanced technologies with ethical concerns about data security and integrity in both military and civilian applications. Defence leaders, tech companies, and users alike must advocate for safeguards that ensure the responsible use of AI. Continuous education and strategic dialogue will be vital in navigating the complexities of modern warfare, personal data security, and the role of big tech in society. With the stakes higher than ever, a collective effort to maintain trust and accountability is essential for a secure future.

Published on: Oct 19, 2024