
The Achilles’ Heel of Autonomous Navigation

In a groundbreaking study, researchers from the University of California, Irvine, alongside their counterparts from Japan’s Keio University, have brought to light significant vulnerabilities in the LiDAR (Light Detection and Ranging) systems employed by autonomous vehicles for navigation. This technology, which is pivotal for the operation of robotic taxis and consumer vehicles alike, has been shown to be susceptible to both “spoofing” and “vanishing” attacks, raising serious safety concerns.

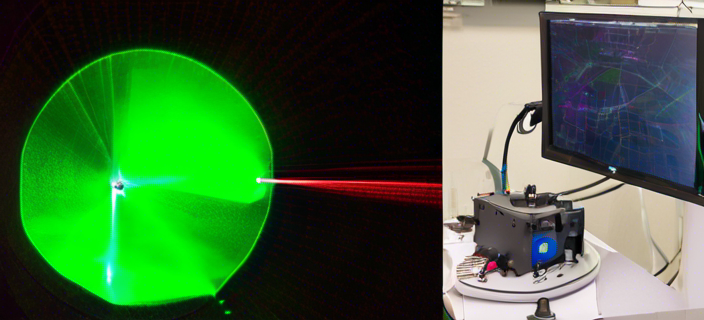

A glimpse into the experimental setup challenging LiDAR’s reliability


A Closer Look at LiDAR’s Flaws

LiDAR systems, which emit laser pulses and time their reflections to detect objects and gauge distances, are integral to the autonomous vehicles developed by companies such as Waymo, a subsidiary of Google’s parent company Alphabet, and General Motors’ Cruise. However, the research team has demonstrated that these systems can be deceived into either detecting non-existent obstacles or failing to recognize real ones. Either failure can lead to unwarranted emergency braking or, worse, collisions.
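To make the distance-gauging step concrete, here is a minimal sketch that assumes the common time-of-flight design, in which range is half the pulse's round-trip time multiplied by the speed of light. The article does not say which ranging principle each tested sensor uses, and the function names are illustrative only, not taken from the study.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float) -> float:
    """Distance to a reflecting object, given the pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half of
    (speed of light * elapsed time).
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def round_trip_for_range(distance_m: float) -> float:
    """Round-trip time a genuine echo from distance_m would take."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A return that arrives 200 ns after firing reads as roughly 30 m away.
    print(f"{range_from_round_trip(200e-9):.2f} m")
```

Under this model the sensor has only the arrival time to go on. A pulse that did not come from a real reflection is still converted into a range, which is the opening a fake-object attack relies on.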

“This is to date the most extensive investigation of LiDAR vulnerabilities ever conducted,” shared Takami Sato, a UCI Ph.D. candidate in computer science.

Through a combination of real-world experiments and computer simulations, the team scrutinized nine commercially available LiDAR systems. Their findings revealed that even the latest generations of LiDAR are not immune to sophisticated attacks, undermining the safety of autonomous driving technology.

The Experiment and Its Implications

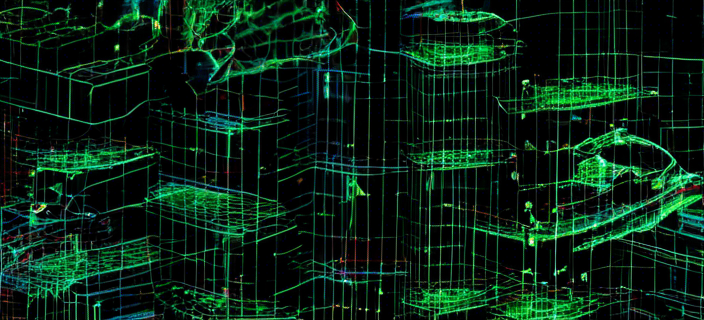

The researchers’ approach involved two distinct types of attacks. The first, dubbed “fake object injection,” tricks the LiDAR system into perceiving phantom obstacles, such as pedestrians or other vehicles. This type of attack primarily affects first-generation LiDAR systems. The second makes real objects “vanish” from the LiDAR’s perception, and it threatens newer LiDAR models even though they include countermeasures such as timing randomization and pulse fingerprinting.
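A toy model can show why timing randomization makes fake object injection harder. This is a simplified sketch, not the researchers' method. It assumes an attacker who times each injected pulse against the sensor's nominal firing schedule, while the sensor measures from its actual, possibly jittered, firing time. The 50 m target, the 100 ns jitter bound, and all function names are hypothetical.

```python
import random

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def spoofed_ranges(fake_distance_m, firing_offsets_s):
    """Ranges the sensor computes for attacker pulses (toy model).

    The attacker aims each pulse to arrive one round trip (for
    fake_distance_m) after the *nominal* firing time. The sensor measures
    from its *actual* firing time, which differs from nominal by
    firing_offsets_s[i], so the measured delay shifts by that offset.
    """
    target_delay_s = 2.0 * fake_distance_m / SPEED_OF_LIGHT_M_PER_S
    return [
        SPEED_OF_LIGHT_M_PER_S * (target_delay_s - offset_s) / 2.0
        for offset_s in firing_offsets_s
    ]


def spread(values):
    """Difference between the largest and smallest value."""
    return max(values) - min(values)


def demo(num_pulses=8, max_jitter_s=100e-9, seed=0):
    """Return (spread without jitter, spread with jitter) in metres."""
    rng = random.Random(seed)
    # Hypothetical sensor with a fixed schedule: no timing offsets.
    fixed = spoofed_ranges(50.0, [0.0] * num_pulses)
    # Hypothetical sensor that jitters each firing time uniformly.
    offsets = [rng.uniform(-max_jitter_s, max_jitter_s) for _ in range(num_pulses)]
    jittered = spoofed_ranges(50.0, offsets)
    return spread(fixed), spread(jittered)


if __name__ == "__main__":
    fixed_spread, jittered_spread = demo()
    print(f"fixed schedule spread:    {fixed_spread:.2f} m")
    print(f"jittered schedule spread: {jittered_spread:.2f} m")
```

With a fixed schedule, every injected pulse lands at the same apparent range and can pass for a solid object. With jitter, the injected points scatter in range. The sketch ignores pulse fingerprinting and receiver details, and it says nothing about the vanishing attack, which the study found still works against newer sensors.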

The invisible dangers: How LiDAR systems can be fooled


Future Directions and Safety Measures

The revelations from this study underscore the urgent need for enhanced security measures in the design and manufacturing of autonomous vehicle systems. As Qi Alfred Chen, UCI assistant professor of computer science and senior co-author of the study, points out, these vulnerabilities could directly trigger unsafe driving behaviors, necessitating immediate attention from manufacturers and regulatory bodies.

The research, supported by the National Science Foundation, not only highlights the potential risks associated with current autonomous vehicle technologies but also paves the way for the development of more secure and reliable navigation systems in the future.

As autonomous vehicles continue to evolve, ensuring the safety and reliability of their navigation systems remains paramount. The findings from UCI and Keio University serve as a critical reminder of the ongoing challenges in the quest for truly autonomous driving, urging a collective effort towards more robust and secure technological solutions.

