The Rise of AI Bots: How News Publishers Are Struggling to Protect Their Content

The tension between AI companies and news publishers has reached a boiling point, with Perplexity AI at the center of the controversy. The AI-powered search engine has been accused of plagiarizing news articles and failing to attribute its sources properly. This has led to a wider debate about the methods AI companies use to scrape online content and the measures publishers can take to protect their work.

Perplexity AI: The New Kid on the Block

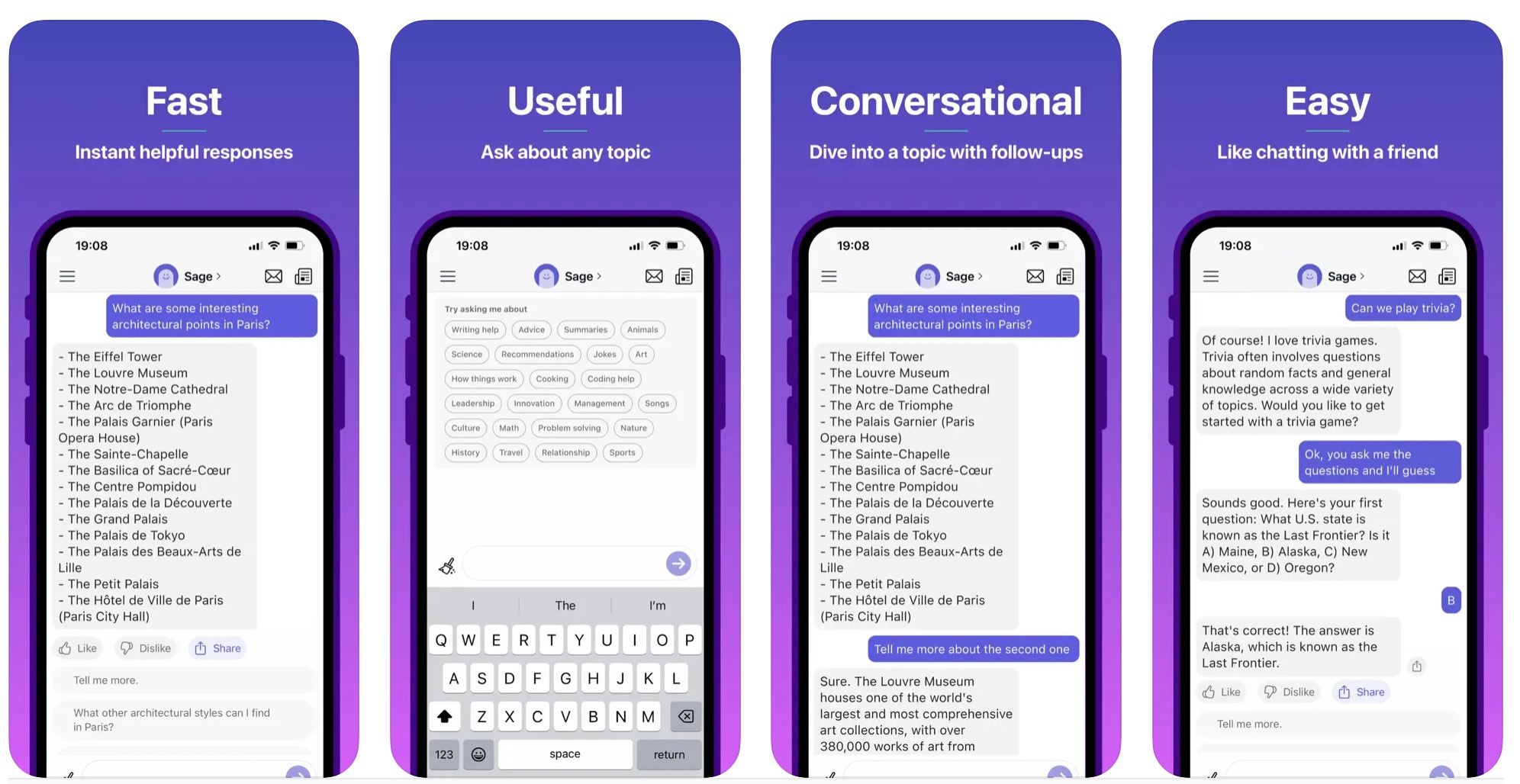

Perplexity AI was founded by Aravind Srinivas, an IIT Madras graduate who previously worked at prominent tech ventures such as Google, DeepMind, and OpenAI. The company’s mission was to disrupt the search engine market by providing personalized answers to users’ queries using AI. However, things took a turn for the worse when Perplexity AI rolled out a feature called ‘Pages’, which lets users enter a prompt and receive a researched, AI-generated report that cites its sources and can be published as a web page and shared with anyone.

Perplexity AI’s ‘Pages’ feature has been accused of plagiarizing news articles

The Controversy Surrounding Perplexity AI

Days after the rollout of ‘Pages’, Perplexity AI published an AI-generated report based on an exclusive Forbes article about ex-Google CEO Eric Schmidt’s involvement in a secret military drone project. Forbes claimed that the wording of Perplexity AI’s summary closely resembled that of its paywalled article, and that the artwork in the article had also been copied. The publication further alleged that it had not been cited prominently enough.

Forbes accused Perplexity AI of plagiarizing its article

Why Perplexity AI is Receiving Flak from Publishers

In addition to allegedly plagiarizing articles and bypassing paywalls, Perplexity AI has also been accused of not complying with accepted web standards such as robots.txt files. A robots.txt file contains instructions that tell bots which web pages they may and may not access. However, robots.txt is not legally binding and compliance is voluntary, so it offers little defense against AI bots that simply choose to ignore the instructions within the file.

Robots.txt files are not legally binding
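
To illustrate how a well-behaved crawler is expected to consult these instructions, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The bot name `ExampleAIBot`, the domain `news.example.com`, and the rules themselves are hypothetical, invented for this example rather than taken from any real publisher's file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt contents (illustrative only):
# one named bot is barred from the whole site, and every
# other bot is barred from the /premium/ section.
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Disallow: /premium/
"""


def is_allowed(user_agent: str, url: str) -> bool:
    """Return True if the rules above permit user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(user_agent, url)


# The named bot is refused everywhere.
print(is_allowed("ExampleAIBot", "https://news.example.com/story"))
# Other bots may read ordinary pages but not the /premium/ section.
print(is_allowed("OtherBot", "https://news.example.com/story"))
print(is_allowed("OtherBot", "https://news.example.com/premium/story"))
```

Note that the check runs on the crawler's side. Nothing here stops a bot that never calls `can_fetch`, which is why the file alone cannot keep out a scraper that decides to ignore it.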

Other AI Companies Bypassing Web Standards

Perplexity AI is not the only AI company that has been accused of bypassing web standards. Quora’s AI chatbot Poe goes one step further than a summary and provides users with an HTML file of paywalled articles for download. Content licensing startup TollBit has also said that more and more AI agents are opting to bypass the robots.txt protocol to retrieve content from sites.

Quora’s AI chatbot Poe provides users with an HTML file of paywalled articles for download

How Can Publishers Block AI Bots?

The emerging trend of AI bots reportedly defying web standards and bypassing paywalls raises an important question: what other measures can publishers take to prevent the unauthorized scraping and use of their online content? Reddit has said that in addition to updating its robots.txt file, it is also using a technique known as rate limiting, which caps the number of times a user can perform certain actions within a specified time frame.

Reddit’s rate limiting technique
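
To make the idea of rate limiting concrete, here is a minimal Python sketch of one common variant, a sliding-window limiter. It is an illustration under stated assumptions, not a description of how Reddit implements the technique: the class name `SlidingWindowRateLimiter`, the limit of three requests per 60 seconds, and the client identifier are all hypothetical.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most max_requests per client within window_seconds."""

    def __init__(self, max_requests, window_seconds, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id):
        now = self.clock()
        hits = self._hits[client_id]
        # Discard timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: refuse this request
        hits.append(now)
        return True


# Hypothetical policy: three requests per minute per client.
limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=60)
results = [limiter.allow("203.0.113.7") for _ in range(5)]
print(results)  # [True, True, True, False, False]
```

A server would typically key the limiter on an IP address, API token, or account and answer refused requests with an HTTP 429 status. Unlike robots.txt, this is enforced on the publisher's side, so it does not depend on the bot's cooperation.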

There has also been a rise in the development of data poisoning tools like Nightshade and Kudurru, which claim to help artists stop AI bots from ingesting their artwork by corrupting the scrapers’ datasets in retaliation.

Data poisoning tools like Nightshade and Kudurru claim to help artists stop AI bots from ingesting their artwork

Conclusion

The controversy surrounding Perplexity AI has brought to light the struggles that news publishers face in protecting their online content from AI bots. As AI technology continues to evolve, publishers will need new ways to prevent the unauthorized scraping and use of their content. Whether it’s through updating robots.txt files, using rate limiting techniques, or developing data poisoning tools, the fight against AI bots is far from over.